So-called “emergent” behavior in LLMs may not be the breakthrough that researchers think.

TLDR: Let’s say you want to teach an LLM a new skill. You give it training data pertaining to that skill. Currently, researchers believe that this skill shows up suddenly, in a breakthrough fashion. They think so because of the methods they use to measure the skill: the measured skill level stays very low until it unpredictably jumps up like crazy. This is the “breakthrough”.

BUT, the paper that this article references points out flaws in these methods of measuring skills. It suggests that breakthrough behavior doesn’t really exist and that skill development is actually quite predictable.
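A toy calculation shows how the choice of metric alone can manufacture a “breakthrough”. This sketch is in the spirit of the paper's argument, not its actual analysis: it assumes (hypothetically) that a model's per-token accuracy improves steadily with size, that token errors are independent, and that an answer only counts if all 10 of its tokens are right, as under an exact-match metric. The accuracy values are made up for illustration.

```python
# Toy illustration with hypothetical numbers: per-token accuracy improves
# smoothly with model size, but an all-or-nothing metric hides that progress.

ANSWER_LENGTH = 10  # hypothetical: number of tokens that must all be correct


def exact_match(per_token_acc: float, length: int = ANSWER_LENGTH) -> float:
    # Assuming independent token errors, the whole answer is right only
    # if every one of its tokens is right.
    return per_token_acc ** length


# Hypothetical per-token accuracies for a series of increasingly large models.
per_token = [0.50, 0.60, 0.70, 0.80, 0.90, 0.95, 0.99]

for p in per_token:
    print(f"per-token {p:.2f} -> exact match {exact_match(p):.3f}")
```

Per-token accuracy climbs in even steps, while the exact-match score sits near zero for the first few models and then shoots up, even though nothing discontinuous happened underneath.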

Also, uhhh I’m not AI (I see that TLDR bot lurking everywhere, which is what made me specify this).

Also, uhhh I’m not AI

An AI would say that… 😂

Clearly, the AI is learning deception

Re: your last point, AFAIK the TLDR bot is also not an AI or an LLM; it uses more classical NLP methods for summarization.

The README at https://github.com/RikudouSage/LemmyAutoTldrBot says the summarization is done in summarizer.py, which uses sumy, specifically its LSA (latent semantic analysis) summarizer, which is documented here
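For anyone curious what LSA summarization does, here is a minimal pure-Python sketch: build a term-by-sentence count matrix, find its dominant right singular vector by power iteration, and keep the sentences that weigh most heavily on that dominant “topic”. This is a simplified single-topic variant written for illustration; it is not sumy's LsaSummarizer code, which adds refinements such as stop-word handling, stemming and scoring over several topics. All function names here are hypothetical.

```python
import math
import re
from collections import Counter


def split_sentences(text: str) -> list[str]:
    # Naive splitter: break after ".", "!" or "?" followed by whitespace.
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def term_sentence_matrix(sentences: list[str]) -> list[list[int]]:
    # Rows are terms, columns are sentences; entries are raw term counts.
    counts = [Counter(re.findall(r"[a-z']+", s.lower())) for s in sentences]
    vocab = sorted(set().union(*counts))
    return [[c[term] for c in counts] for term in vocab]


def dominant_right_singular_vector(a: list[list[int]], n: int, iters: int = 200) -> list[float]:
    # Power iteration on A^T A (the sentence-by-sentence word-overlap matrix)
    # converges to the top right singular vector of A.
    ata = [[sum(row[i] * row[j] for row in a) for j in range(n)] for i in range(n)]
    v = [1.0] * n
    for _ in range(iters):
        w = [sum(ata[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in w)) or 1.0
        v = [x / norm for x in w]
    return v


def lsa_summary(text: str, k: int = 1) -> list[str]:
    sentences = split_sentences(text)
    if not sentences:
        return []
    v = dominant_right_singular_vector(term_sentence_matrix(sentences), len(sentences))
    # Rank sentences by their weight on the dominant topic, keep the top k,
    # and return them in their original order.
    top = sorted(range(len(sentences)), key=lambda i: abs(v[i]), reverse=True)[:k]
    return [sentences[i] for i in sorted(top)]


text = "Cats chase mice. Cats and dogs chase balls. The stock market fell today."
print(lsa_summary(text, k=1))
```

The off-topic sentence shares no words with the others, so it gets essentially no weight on the dominant topic and is the first to be dropped.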

Natural language processing falls under AI though, and so do large language models (see chapters 23 and 24 of Russell and Norvig, 2021 http://aima.cs.berkeley.edu/).

Also, uhhh I’m not AI

That’s exactly what an AI that had developed an emergent skill for lying would say

🤥

Or a model that picked up on a pattern of sources saying that.

What always irks me about those “emergent behavior” articles: no one ever really defines what those amazing “skills” are supposed to be.

The term “emergent behavior” is used in a very narrow and unusual sense here. According to the common definition, pretty much everything that LLMs and similar AIs do is emergent. We can’t figure out what a neural net does by studying its parts, just like we can’t figure out what an animal does by studying its cells.

We know that bigger models perform better in tests. When we train bigger and bigger models of the same type, we can predict how good they will be, depending on their size. But some skills seem to appear suddenly.

Think about someone starting to exercise. Maybe they can’t do a pull-up at first, but they try every day. Until one day they can. They were improving the whole time in the various exercises they did, but it could not be seen in this particular thing. The sudden, unpredictable emergence of this ability is, in a sense, an illusion.
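The predictable side of this, forecasting how good a bigger model will be from its size, is typically done by fitting a scaling curve to smaller models and extrapolating. Here is a minimal sketch that assumes a pure power law, loss ≈ a·N^(-b) with N the parameter count, and fits it with ordinary least squares in log-log space. Real scaling-law fits often add an irreducible-loss term, and the “measurements” here are generated from made-up constants rather than taken from any real model.

```python
import math


def fit_power_law(sizes: list[float], losses: list[float]) -> tuple[float, float]:
    # Fit loss = a * size**(-b) by least squares on
    # log(loss) = log(a) - b * log(size).
    xs = [math.log(s) for s in sizes]
    ys = [math.log(loss) for loss in losses]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope


def predict(a: float, b: float, size: float) -> float:
    # Predicted loss for a model with `size` parameters.
    return a * size ** (-b)


# Hypothetical losses for small models, generated from a=20, b=0.1 (not real data).
sizes = [1e6, 1e7, 1e8, 1e9]
losses = [predict(20.0, 0.1, s) for s in sizes]

a, b = fit_power_law(sizes, losses)
print(f"fitted a={a:.2f}, b={b:.3f}")
print(f"predicted loss at 1e11 params: {predict(a, b, 1e11):.3f}")
```

A power law is a straight line in log-log space, which is why a simple linear fit on the logs is enough; this smooth aggregate curve is the steady improvement that, in the pull-up analogy, sits underneath an apparently sudden skill.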

For a literal answer, I will quote:

Emergent behavior is pretty much anything an old model couldn’t do that a new model can. Simple reasoning, creating coherent sentences, “theory of mind”, basic math, and translation are, I think, a few examples.

They aren’t “amazing” in the sense that a human can’t do them, but they are in the sense that a computer is doing it.


… without specifically being trained for it, to be precise.

One of those things I remember reading about was ChatGPT’s ability to translate texts. It was trained on texts in multiple languages, but never on translation specifically. Still, it’s quite good at it.

That is just its core function doing its thing: transforming inputs into outputs based on learned pattern matching.

It may not have been trained on translation explicitly, but it very much has been trained on matching text across languages through its training material. You know what its training set most likely contained? Dictionaries. Which is as good as asking it to learn translation. Something else most likely in the training data: language course books with matching translated sentences in them. Again, you didn’t explicitly tell it to learn to translate, but in practice the selection of training data did it for you.

The data is there, but simpler models just couldn’t do it, even when trained with that data.

Bilingual human children also often can’t translate between their two (or more) native languages until they get older.

That’s interesting. My trilingual kids definitely translate individual words, but I guess the real bar here is to translate sentences such that the structure is correct for the languages?

A lot of the training set was probably Wiktionary and Wikipedia, which include translations, grammar, syntax, semantics, cognates, etc.

I’m not sure why they are describing it as “a new paper”: this came out in May of 2023 (and as such it notably used only GPT-3 and not GPT-4, which is where some of the biggest leaps to date have been documented).

For those interested in the debate on this, the rebuttal by Jason Wei (an author of the original emergent abilities paper and also the guy behind the CoT prompting paper) is interesting: https://www.jasonwei.net/blog/common-arguments-regarding-emergent-abilities

In particular, I find his argument at the end compelling:

Another popular example of emergence which also underscores qualitative changes in the model is chain-of-thought prompting, for which performance is worse than answering directly for small models, but much better than answering directly for large models. Intuitively, this is because small models can’t produce extended chains of reasoning and end up confusing themselves, while larger models can reason in a more-reliable fashion.

If you follow the evolution of prompting in research lately, there’s definitely a pattern of reliance on increased inherent capabilities.

Whether that’s using analogy to solve similar problems (https://openreview.net/forum?id=AgDICX1h50) or self-determining the optimal strategy for a given problem (https://arxiv.org/abs/2402.03620), there are double-digit performance gains in state-of-the-art models from having them perform actions that less sophisticated models simply cannot perform.

The compounding effects of competence alone mean that progress here isn’t going to be a linear trajectory.
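Wei's chain-of-thought intuition and the compounding point can be made concrete with a toy model. It assumes (hypothetically) that a chain of thought succeeds only if every one of its steps is right, that step errors are independent, and that the success probabilities used are invented for illustration rather than measured. Under those assumptions, a modest gain in per-step reliability becomes a much larger gain over a whole chain, so reasoning step by step can lose to answering directly for a weak model while winning for a strong one.

```python
# Toy model with hypothetical numbers: answering directly succeeds with a fixed
# probability, while a chain of thought succeeds only if every step is right.


def chain_success(step_reliability: float, steps: int) -> float:
    # Assumes independent step errors, and that any single error ruins the answer.
    return step_reliability ** steps


def prefers_chain(step_reliability: float, steps: int, direct: float) -> bool:
    # True when reasoning step by step beats answering directly.
    return chain_success(step_reliability, steps) > direct


STEPS = 5  # hypothetical problem that needs 5 reasoning steps

# Hypothetical (per-step reliability, direct-answer accuracy) for two models.
models = {"small": (0.70, 0.20), "large": (0.97, 0.40)}

for name, (p, direct) in models.items():
    print(
        f"{name}: chain {chain_success(p, STEPS):.2f} vs direct {direct:.2f}"
        f" -> use CoT: {prefers_chain(p, STEPS, direct)}"
    )
```

With these made-up numbers the small model's five-step chain succeeds about 17% of the time, below its 20% direct accuracy, while the large model's chain succeeds about 86% of the time, well above its direct accuracy.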

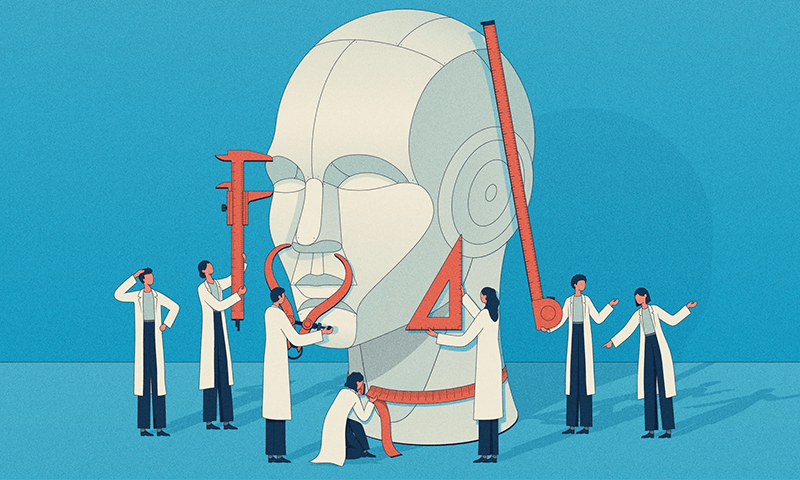

What material benefit does a cutesy representation of phrenology, a pseudoscience used to justify systematic racism, bring to this article or discussion?