It looks like there are instructions here about hosting your own Flatpak repository: https://docs.flatpak.org/en/latest/hosting-a-repository.html

I made LASIM! https://github.com/CMahaff/lasim

I currently have 3 accounts (big shock):

- 3 Posts

- 22 Comments

2·2 months ago

Doubling what Klaymore said: I’ve seen this “just work” as long as all partitions share the same password, with no key files necessary.

That said, if you need to use a key file for some reason, that should work too, especially if your root directory is one big partition. Keep in mind that the LUKS commands for creating a password-based encrypted partition and a key-file-based encrypted partition are different, so you can’t, for example, simply put your plaintext password into a file and expect that to unlock a LUKS partition that was set up with a password.

At boot time, your root partition should be unlocked and mounted first, which is when you get prompted for the password. After that, the system looks at the entries in /etc/crypttab for information about unlocking the other partitions. In that file you can provide the path to your key file, and as long as the key file is on the same (already unlocked) partition as the crypttab, it should be able to unlock any other partitions you have at boot time.
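As a rough illustration of what lives in /etc/crypttab, here is a minimal Python sketch that parses crypttab-style lines into their four whitespace-separated fields (target name, source device, key file, options), following the layout described in crypttab(5). The device and key file paths below are hypothetical, and real boot tooling handles more cases than this; the point is just to show where the key file path goes and that `none` or `-` in that field means no explicit key file is given.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class CrypttabEntry:
    name: str                # mapper name, i.e. the device appears as /dev/mapper/<name>
    device: str              # encrypted source device (a path, UUID=..., etc.)
    key_file: Optional[str]  # None means no explicit key file was given
    options: List[str] = field(default_factory=list)


def parse_crypttab(text: str) -> List[CrypttabEntry]:
    """Parse crypttab-style text, skipping blank lines and # comments."""
    entries = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        parts = line.split()
        if len(parts) < 2:
            raise ValueError(f"malformed crypttab line: {raw!r}")
        name, device = parts[0], parts[1]
        key = parts[2] if len(parts) > 2 else None
        # "none" and "-" both mean "no key file specified" in crypttab(5).
        if key in ("none", "-"):
            key = None
        options = parts[3].split(",") if len(parts) > 3 else []
        entries.append(CrypttabEntry(name, device, key, options))
    return entries


# Hypothetical example: "data" is unlocked with a key file stored on the
# already-unlocked root partition; "backup" has no key file.
SAMPLE = """
# <name>  <device>          <key file>            <options>
data      UUID=1234-abcd    /root/keys/data.key   luks
backup    /dev/sdb2         none                  luks,discard
"""

for entry in parse_crypttab(SAMPLE):
    print(entry.name, entry.key_file)
```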

It is also possible, as one of your links shows, to automatically unlock even the root partition by putting a key file and custom /etc/crypttab into your initramfs (first thing mounted at boot time), but it’s not secure to do so since the initramfs isn’t (and can’t be) encrypted - it’s kind of the digital equivalent of hiding the house key under the door mat.

Another solution to this situation is to squash your changes in place so that your branch is just 1 commit, and then do the rebase against your master branch or equivalent.

Works great if you’re willing to lose the commit history on your branch, which obviously isn’t always the case.
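A minimal Python sketch of that squash-then-rebase workflow, driving the git CLI through `subprocess`. It assumes you are on your feature branch with a clean working tree and that the base branch is called `master` (swap in `main` or whatever you use). `squash_then_rebase` and its injectable `run` parameter are hypothetical names for illustration. The squash here is done with `git reset --soft` back to the merge base, which is one common way to do it; an interactive rebase is another.

```python
import subprocess
from typing import Callable, List

Runner = Callable[[List[str]], str]


def git_runner(args: List[str]) -> str:
    """Run a git command in the current directory and return its stdout."""
    result = subprocess.run(["git", *args], check=True, capture_output=True, text=True)
    return result.stdout.strip()


def squash_then_rebase(base: str, message: str, run: Runner = git_runner) -> None:
    """Collapse the current branch into one commit, then rebase it onto `base`.

    This discards the branch's individual commit history.
    """
    # Find the commit where this branch diverged from the base branch.
    fork_point = run(["merge-base", "HEAD", base])
    # Move the branch back to the fork point, keeping all changes staged.
    run(["reset", "--soft", fork_point])
    # Record all of the branch's changes as a single commit.
    run(["commit", "-m", message])
    # Replay that one commit on top of the latest base branch; any conflicts
    # now only need to be resolved once.
    run(["rebase", base])
```

Usage would look like `squash_then_rebase("master", "Add feature X")`. If the rebase hits a conflict, `check=True` raises `CalledProcessError` and you finish the rebase by hand as usual.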

Sounds like a problem with Memmy. Does this link work? https://lemm.ee/c/sfah@hilariouschaos.com

You should be able to search for communities in your app; searching for “sfah@hilariouschaos.com” should work too.

Basically, communities on Lemmy take the form “name@host”. The “name” can be whatever someone wants, and the “host” is the website / Lemmy instance where that community originates. Because Lemmy is federated, it’s all available everywhere (generally speaking). For example, if you visit https://lemmy.world/c/sfah@hilariouschaos.com it should be the same content, just loaded via lemmy.world instead of lemm.ee. However, if someone went and made a “sfah@lemmy.world” community, that would be a completely separate community from the above, hosted on a different Lemmy instance.
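The name@host scheme can be sketched as a tiny Python model. The `Community` class and its methods are hypothetical illustrations, not part of any Lemmy API; the URL pattern simply follows the links above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Community:
    name: str  # chosen by whoever created the community
    host: str  # the instance where the community originates

    @classmethod
    def parse(cls, ref: str) -> "Community":
        """Parse a "name@host" community reference."""
        name, sep, host = ref.partition("@")
        if not sep or not name or not host:
            raise ValueError(f"expected name@host, got {ref!r}")
        return cls(name, host)

    def url_on(self, instance: str) -> str:
        """URL for viewing this community through any (federated) instance."""
        return f"https://{instance}/c/{self.name}@{self.host}"


sfah = Community.parse("sfah@hilariouschaos.com")
print(sfah.url_on("lemm.ee"))
print(sfah.url_on("lemmy.world"))
```

Because equality compares both fields, `Community.parse("sfah@lemmy.world")` is a different community from `sfah@hilariouschaos.com` even though the names match, which mirrors the point above.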

6·3 months ago

For me, at least with GNOME, toggling between the performance, balanced, and power saver modes dramatically changes my battery life on Ubuntu, so I have to switch modes manually to avoid draining the battery when the laptop is mostly sitting idle. I don’t know if Mint is the same, but I’m throwing out the “obvious” for anyone else running Linux on a laptop.

4·6 months ago

Out of curiosity, what switch are you using for your setup?

Last time I looked, I struggled to find any brand of “home tier” router or switch that supported things like configuring VLANs, etc.

1·6 months ago

> Maybe I am not thinking of the access control capability of VLANs correctly (I am thinking in terms of port based iptables: port X has only incoming+established and no outgoing for example).

I think of it like this: grouping several physical switch ports together into a private network, effectively as if each group of ports were its own isolated switch. I assume there are routers that allow you to assign VLANs to different Wi-Fi access points as well, so it doesn’t need to be literally physical.

The obvious benefit of VLANs over actual physical separation is that you can have as many as you like, and there are ways to trunk the traffic if one client needs access to multiple VLANs at once.

In your setup, you may or may not benefit organizationally. Other commenters have pointed out some of the security benefits. If you were using VLANs, I think you’d have at a minimum a private and a public VLAN, keeping the devices that don’t need Internet access off the Internet entirely. Your server would probably need access to both VLANs in that scenario. But as you say, you can probably accomplish a lot of this without VLANs if you set up your firewall rules aggressively. The benefit of VLANs is that you would only really need to set up firewall rules on whichever VLAN(s) have Internet access.
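A toy Python model of the private/public split described above. The device names and VLAN IDs (10 and 20) are hypothetical, and it only models layer-2 isolation: ports can talk directly only if they share a VLAN. It deliberately ignores inter-VLAN routing, which a router could be configured to do.

```python
from typing import Dict, Set

# Hypothetical VLAN IDs.
PRIVATE, PUBLIC = 10, 20

# Hypothetical port -> VLAN membership. Access ports sit in one VLAN; the
# server's port is a trunk that carries both.
membership: Dict[str, Set[int]] = {
    "nas": {PRIVATE},
    "camera": {PRIVATE},
    "desktop": {PUBLIC},
    "router": {PUBLIC},           # the Internet uplink lives on the public VLAN
    "server": {PRIVATE, PUBLIC},  # trunked: needs access to both VLANs
}


def same_segment(a: str, b: str) -> bool:
    """True if two ports share at least one VLAN (can talk without routing)."""
    return bool(membership[a] & membership[b])


def internet_facing_vlans(uplink: str = "router") -> Set[int]:
    """VLANs that include the Internet uplink, i.e. where firewall rules matter most."""
    return set(membership[uplink])


print(same_segment("camera", "router"))  # camera is isolated from the uplink
print(internet_facing_vlans())
```

The design point is the one made above: firewall rules only need to be written for the VLANs returned by `internet_facing_vlans`, while devices on the private VLAN simply have no layer-2 path to the uplink.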

3·6 months ago

I loved the original Hades, but I played it after it left Early Access.

It’s going to be really hard to resist jumping in early with Hades II.

4·10 months ago

I ran into the same thing. I’ve always just worked around it, but I believe I did find the solution at one point (can’t find the link now).

If I’m remembering right, I believe you need to manually create a bridge between the two networks; by default, TrueNAS isolates the VMs from TrueNAS itself for security reasons.

Sorry I can’t link the exact fix right now, but hopefully this will help you Google the post I found on the subject.

4·10 months ago

Simple thing, but are you sure you mounted the NFS share as NFSv4? I don’t have access to a machine to check right now, but I think it might default to mounting NFSv3, even if both sides support v4.
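One way to check which version a share was actually mounted with is to look at the options the kernel reports in /proc/mounts, where Linux NFS mounts include a `vers=` option (`nfsstat -m` shows similar information). Below is a minimal Python sketch that reads that format. `nfs_versions` is a hypothetical helper, and the sample lines in the usage are made up. To force v4 at mount time you would pass a mount option such as `nfsvers=4` (or its alias `vers=4`).

```python
from typing import Dict, Optional


def nfs_versions(mounts_text: str) -> Dict[str, Optional[str]]:
    """Map each NFS mount point to the 'vers=' value in its mount options."""
    result: Dict[str, Optional[str]] = {}
    for line in mounts_text.splitlines():
        fields = line.split()
        # /proc/mounts columns: device, mount point, fs type, options, dump, pass
        if len(fields) < 4 or fields[2] not in ("nfs", "nfs4"):
            continue
        mount_point, options = fields[1], fields[3].split(",")
        version = next(
            (opt.split("=", 1)[1] for opt in options if opt.startswith("vers=")),
            None,  # no vers= option reported
        )
        result[mount_point] = version
    return result


def read_local_nfs_versions() -> Dict[str, Optional[str]]:
    """Inspect the current machine's mounts (Linux only)."""
    with open("/proc/mounts") as f:
        return nfs_versions(f.read())
```

For example, feeding it a line like `server:/export /mnt/share nfs4 rw,vers=4.2 0 0` reports `4.2` for `/mnt/share`, while a v3 mount shows up with filesystem type `nfs` and `vers=3`.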

4·11 months ago

To add on to this answer (which is correct):

Your “of” can also just be a regular file if that’s easier to work with than creating a new partition for the copy.

You might also want to use the block size parameter “bs=” on “dd” to speed things up, especially if you are using fast storage. Using “dd” with “bs=1G” can be dramatically faster than the default 512-byte blocks, as long as you have more than 1 GB of free RAM, since dd allocates a buffer of that size.
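To illustrate why block size matters, here is a small Python sketch of the core loop dd runs: read one block-sized buffer, write it, repeat. It is not dd itself, and `copy_in_blocks` is a hypothetical helper. It just shows that a larger block size means far fewer read/write calls, at the cost of holding a buffer of that size in memory.

```python
def copy_in_blocks(src: str, dst: str, bs: int) -> int:
    """Copy src to dst bs bytes at a time, roughly like `dd if=src of=dst bs=...`.

    Returns the number of reads that returned data (one per block copied).
    """
    blocks = 0
    # buffering=0 gives raw, unbuffered I/O so each read/write is a real call.
    with open(src, "rb", buffering=0) as fin, open(dst, "wb", buffering=0) as fout:
        while True:
            chunk = fin.read(bs)  # needs a buffer of up to bs bytes in memory
            if not chunk:
                break  # end of input
            view = memoryview(chunk)
            while view:  # raw writes may be partial, so loop until done
                written = fout.write(view)
                view = view[written:]
            blocks += 1
    return blocks
```

For a 10,000-byte file this takes 20 reads with `bs=512` but only 3 with `bs=4096`; the same per-call overhead, multiplied over a whole disk, is a large part of why a big `bs=` helps.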

To expand on this a bit, `git pull` under the hood is basically a shortcut for `git fetch` (get the remote repository’s state) followed by `git merge origin/main` (merge the remote changes into your local branch, which for you is always `main`).

When you have no local changes, this process just “makes a line” in your commit history (see `git log --graph --decorate`), but when you have local changes and the remote has changed too, it has to put those together into a merge commit. Think of a diamond shape with the common ancestor at the bottom, the remote changes on one side, your changes on the other side, and the merge of the two at the top.

Like the above comment says, this process is normally made explicit at the command line; VSCode is probably handling it automatically when there are no code conflicts.
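The “line vs. diamond” behavior can be modeled in a few lines of Python. This is a toy model of the decision, not how git is implemented: commits are plain strings mapped to their parent lists, and `pull` (a hypothetical name) returns the new tip of `main` after merging the fetched `origin/main`.

```python
from typing import Dict, List, Set

Graph = Dict[str, List[str]]  # commit id -> list of parent commit ids


def ancestors(graph: Graph, commit: str) -> Set[str]:
    """All commits reachable from `commit`, including itself."""
    seen: Set[str] = set()
    stack = [commit]
    while stack:
        c = stack.pop()
        if c not in seen:
            seen.add(c)
            stack.extend(graph[c])
    return seen


def pull(graph: Graph, local: str, remote: str, merge_id: str) -> str:
    """Return the new local tip after merging `remote` into `local`."""
    if remote in ancestors(graph, local):
        return local  # nothing new on the remote: already up to date
    if local in ancestors(graph, remote):
        return remote  # no local changes: fast-forward, history stays a line
    # Both sides changed: create a merge commit with two parents (the diamond).
    graph[merge_id] = [local, remote]
    return merge_id
```

With `{"A": [], "B": ["A"], "C": ["B"]}`, pulling `C` into `B` simply moves the tip to `C`. With `{"A": [], "L": ["A"], "R": ["A"]}`, the result is a new merge commit whose parents are `L` and `R`, sitting on top of the common ancestor `A`.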

7·1 year ago

Not an expert myself, but I think chips that truly sip power not only have a much lower power floor but also take more aggressive actions to reduce power when idle.

Certainly with the right software tuning you could aggressively throttle the CPU to save power - I’m just not sure how much power it would actually save.

I did find this really good article on reducing the Raspberry Pi Zero 2W power consumption: https://www.cnx-software.com/2021/12/09/raspberry-pi-zero-2-w-power-consumption/

30·1 year ago

I saw this complaint in another post online (paraphrased):

> The screen and the use of a Pi seem at odds with each other. The screen is ultra-low power, but there are of course huge drawbacks for usability. Meanwhile the CPU is very powerful but, comparatively, chews through a lot of power quickly.

They argued that it would be better to either pair the Pi with a better screen for a more powerful/usable handheld, or go all in on longevity and use some kind of low-power chip to pair with the screen for a terminal that could last for days.

… I’ve got to say, it’s a fair point. A low-power handheld that could run Linux for days would be pretty cool, even if it was underpowered compared to a Pi. No idea what you could use for such a thing, though.

1·1 year ago

Your Lemmy profile settings include an option to not show bot accounts.

1·1 year ago

This is now released :)

3·1 year ago

For anyone finding this in the future:

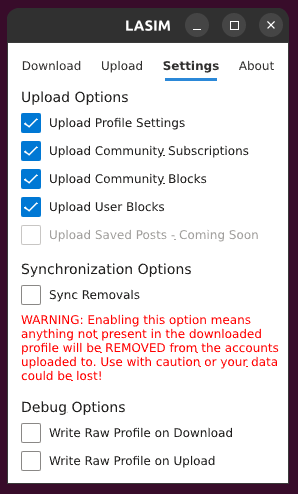

The latest version of LASIM (0.2.1) has a Settings tab that allows you to choose what you want to upload.

If you are using the JSON file posted above, you’d want to choose just “Upload Community Subscriptions” on this tab so that your profile settings, etc. are not changed.

2·1 year ago

Sneak peek :)

0·1 year ago

I hadn’t even considered this use case for LASIM, but that’s really neat.

I’ve been thinking about a settings page where you can toggle what to sync, among a few other future features. I’ll definitely add an option in the future to NOT sync the profile settings.

More of a debugging step, but have you tried running `lsinitrd` on the initramfs afterwards to verify your script actually got added? You could theoretically decompress the entire image to look around as well. I don’t know the specifics for Alpine, but presumably there would be a file present somewhere that should be calling your custom script.

EDIT: Could it also be failing because the folder you are trying to mount to does not exist? Don’t you need a `mkdir` somewhere in your script?
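If you do decompress the image, it helps to know that an initramfs is normally a cpio archive in the “newc” format, often compressed. As a rough stand-in for the file listing `lsinitrd` gives you, here is a minimal Python sketch that lists member paths from an uncompressed or gzip-compressed newc archive. Assuming gzip is a guess about your image: archives compressed with xz, zstd, or lz4, or with an uncompressed early-microcode archive prepended, would need more handling. `make_newc` is a hypothetical helper included only so the sketch can be tried without a real image.

```python
import gzip
from typing import Dict, List

NEWC_MAGIC = b"070701"
HEADER_LEN = 110  # 6-byte magic + 13 fields of 8 hex digits


def _pad4(n: int) -> int:
    """Round n up to a multiple of 4 (newc pads names and data to 4 bytes)."""
    return (n + 3) & ~3


def list_newc(data: bytes) -> List[str]:
    """List member paths in a newc cpio archive (optionally gzip-compressed)."""
    if data[:2] == b"\x1f\x8b":  # gzip magic number
        data = gzip.decompress(data)
    names: List[str] = []
    pos = 0
    while pos + HEADER_LEN <= len(data):
        header = data[pos:pos + HEADER_LEN]
        if header[:6] != NEWC_MAGIC:
            raise ValueError(f"bad cpio magic at offset {pos}")
        fields = [int(header[6 + 8 * i:14 + 8 * i], 16) for i in range(13)]
        filesize, namesize = fields[6], fields[11]  # c_filesize, c_namesize
        name_start = pos + HEADER_LEN
        name = data[name_start:name_start + namesize - 1].decode()  # drop NUL
        if name == "TRAILER!!!":  # end-of-archive marker
            break
        names.append(name)
        data_start = _pad4(name_start + namesize)
        pos = _pad4(data_start + filesize)
    return names


def make_newc(files: Dict[str, bytes]) -> bytes:
    """Build a tiny newc archive (hypothetical test helper)."""
    out = bytearray()
    entries = list(files.items()) + [("TRAILER!!!", b"")]
    for ino, (name, body) in enumerate(entries):
        encoded = name.encode() + b"\0"
        fields = [ino, 0o100644, 0, 0, 1, 0, len(body), 0, 0, 0, 0, len(encoded), 0]
        out += NEWC_MAGIC + b"".join(b"%08X" % f for f in fields)
        out += encoded
        out += b"\0" * (_pad4(len(out)) - len(out))
        out += body
        out += b"\0" * (_pad4(len(out)) - len(out))
    return bytes(out)
```

Against a real image you would read the file as bytes and pass it to `list_newc`, then look for your script (and for whatever is supposed to call it) in the returned paths.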