LOL

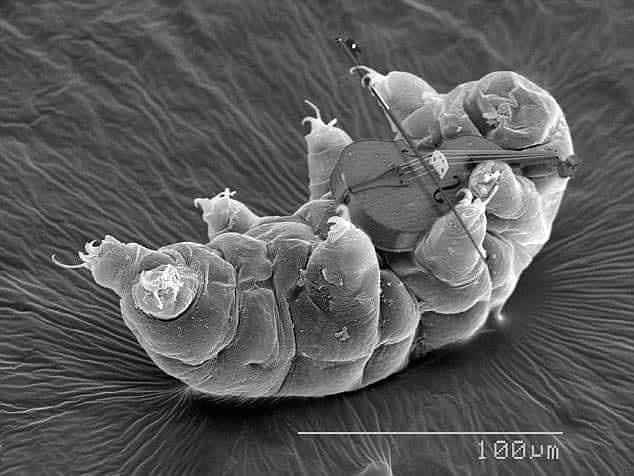

So the same people who have no problem using other people’s copyrighted work are now crying when the Chinese do the same to them? Find me a nano-scale violin so I can play a really sad song.

That’s obviously a cello.

Maybe a cello if it was human grade. But that’s tardigrade.

Pedant time: That’s microscale not nanoscale.

You can shoot me now, it’s deserved.

Can you put a liuqin in there?

Planck could not scale small enough.

You more elegantly said what I came to say.

Stealing from thieves is not theft

Yes it is. Although I personally have far fewer moral objections to it.

To elaborate:

OpenAI scraped data without permission, and then made money from it. Deepseek then used that data (even paid OpenAI for it), trained a model on that data, and then released that model for anyone to use.

While it’s still making use of “stolen data” (that’s a whole semantics discussion I won’t get into right now), I find it far more noble than the former.

Came to say something similar. Like I give a fuck that OpenAI’s model/tech/whatever was “stolen” by Deepseek. Fuck that piece of shit Sam Altman.

“Receiving stolen goods” is prosecutable.

It’s a lesser crime than the original theft though.

Cry me a fucking river, David.

Bruh, these guys trained their own AI on so-called “publicly available” content. Except it was, and still is, completely without consent from, or compensation to, said artists/bloggers/creators etc… Don’t throw rocks when you live in a glass house 🤌

Another reason why I use Andi: it doesn’t pull copyrighted content into its own knowledge base. It’s a search assistant, not a chatbot like the others. It searches by concept and gives a direct answer to your question, also listing links to the sources and pages where it found the answers. Its LLM is only there to “understand” (to call it something) your question, to search for pages that contain information about it, and to understand the content well enough to summarize it. There isn’t any third-party or copyrighted content in the LLM. Its knowledge is real-time web content, like any other search engine. Even in its (reduced) chat capabilities, it always shows the sources where it found its answers.

Traditional search works with keywords, listing thousands of pages where the keyword appears, which means that 99% of the list has nothing to do with what you are looking for. This is the reason why AI search gives better results, but not chatbots, which look for answers in their own knowledge base and invent answers if they don’t find any.

Oh are we supposed to care about substantial evidence of theft now? Because there’s a few artists, writers, and other creatives that would like to have a word with you…

Womp womp. I’m sure OpenAI asked for permission from the creators for all its training data, right? Thief complains about someone else stealing their stolen goods, more at 11.

OpenAI’s mission statement is also in their name. The fact that they have a proprietary product that is not open source is criminal, and they should be sued out of existence. They are now just like Sun Micro after Apache was open-sourced: irrelevant, they just haven’t gotten the memo yet. No company can compete against the whole world.

i agree FOSS is the way to go, and that OpenAI has a lot to answer for… but FOSS is not the only way to interpret “open”

the “open” was never intended as open source - it was open access. the idea was that anyone should have access to build things using AI; that it shouldn’t be for only megacorps who had the pockets to train… which they have, and still are doing

they also originally intended that all their research and patents would be open, which i believe they’re still doing

Your point OpenAI? Weren’t you part of the group saying training AI wasn’t copyright infringement? Not so happy when it’s your shit being copied? Huh. Weird.

The only concern is how much the cost of training the model changes if it got a significant kickstart from previous, very-expensive training. I was interested because it was said to be comparable for a fraction of the cost. "Open"AI can suck sand.

so he’s just admitting that deepseek did a better job than openai but for a fraction of the price? it only gets better.

It’s funny that they did all that and open-sourced it too. Like some kid accusing another of copying their homework while the other kid did significantly better and also offered to share.

When you can’t win, accuse them of cheating.

But, but they committed the copyright infringement first. It’s theirs. That’s totally unfair. What are tech bros going to do? Admit they are grossly overvalued? They’ve already spent the billions.

Me pretending to care about David Sacks’ claim:

Here’s the thing… It was a bubble because you can’t wall off the entire concept of AI. This revelation was just an acceleration displaying what should’ve been obvious.

There are many, many open models available for people to fuck around with. I have, in a homelab setting, just to keep abreast of what is going on and get a general idea of how it works and what it’s capable of.

What most normie followers of AI don’t seem to understand is, whether you’re doing LLM or machine learning object detection or something, you can get open software that is “good enough” and run it locally. If you have a raspberry pi you can run some of this stuff, and it will be slow, but acceptable for many use cases.

So the concept that only OpenAI would ever hold the keys and should therefore have massive valuation in perpetuity, that is just laughable. This Chinese company just highlighted that you can bruteforce train more optimized models on garbage-tier hardware.

Yes, AI is here to stay, that is a fact. Also, soon there will be a unified global AI (see the Stargate Project) which will make all other LLMs obsolete. A $500 billion project. The only thing we can do is use it in the most intelligent way; avoiding it will be impossible.

I couldn’t give less of a fuck.

FUD, just to distract from the crushing multibillion dollar defeat they’ve just been dealt. First stage of grief: denial. Second: anger. Third: bargaining. We’re somewhere between 2 and 3 right now.

Nope, it’s definitely true, but it’s sensationalism. Almost all models are trained using GPT.

OpenAI stole all of our data to train their model. If this is true, no sympathy.

That is what I mean: there’s a difference between an AI with robbed content in its knowledge/language base and an AI assistant which only searches for information on the web to answer, linking to the corresponding pages. A way more intelligent and ethical use of AI.